I may have found a way to work around the problem, but I am getting errors. I defined $\epsilon_t^s$ using pm.Normal, as before, and pass it into the drift coefficient of the variance-process SDE, also as before. For the return process, I can't use pm.Deterministic because I can't pass in the observed returns. But what if I specify the return process as an SDE with 1) $\epsilon_t^s$ passed into the drift coefficient and 2) a zero diffusion coefficient?
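For reference, this is the per-step transition density that, as far as I understand PyMC3's EulerMaruyama, is used to score a path of the SDE $dX_t = f(X_t, \theta)\,dt + g(X_t, \theta)\,dW_t$ with step $\Delta t$ (the `dt` argument, 1.0 in the code). Treat it as a reading of the implementation that should be checked against the installed version, not as documented behaviour:

$$
X_t \mid X_{t-1} \sim \mathcal{N}\!\left(X_{t-1} + \Delta t\, f(X_{t-1}, \theta),\ \Delta t\, g(X_{t-1}, \theta)^2\right)
$$

With $f = \sqrt{V_t}\,\epsilon_t^s$ and $g \equiv 0$, the variance $\Delta t\, g^2$ is zero, so each step's Normal density collapses to a point mass rather than an ordinary likelihood.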

import numpy as np

import matplotlib.pyplot as plt

import pymc3 as pm

from pymc3.distributions.timeseries import EulerMaruyama

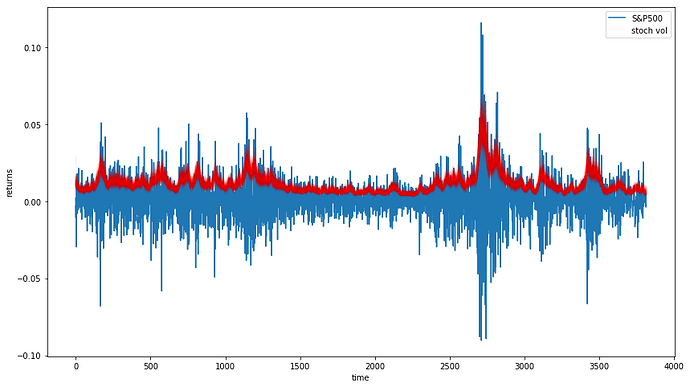

returns = np.genfromtxt(pm.get_data("SP500.csv"))

def Vt_sde(Vt, sigma_v, rho, beta_v, alpha_v, error_s):

return alpha_v + beta_v * Vt + sigma_v * np.sqrt(Vt) * rho * error_s, sigma_v * np.sqrt(Vt) * np.sqrt(1-rho*rho)

def Yt_sde(Vt, error_s):

return np.sqrt(Vt) * error_s,0

with pm.Model() as sp500_model:

alpha_v=pm.Normal('alpha',0,sd=100)

beta_v=pm.Normal('beta',0,sd=10)

sigma_v=pm.InverseGamma('sigmaV',2.5,0.1)

rho=pm.Uniform('rho',-.9,.9)

error_s = pm.Normal('error_s',mu=0.0,sd=1)

Vt = EulerMaruyama('Vt', 1.0, Vt_sde, [sigma_v, rho, alpha_v, beta_v, error_s], shape=len(returns),testval=np.repeat(.01,len(returns)))

Yt = EulerMaruyama('Yt', 1.0,Yt_sde, [Vt, error_s], observed=returns)

Unfortunately, I am getting the following error:

TypeError: Yt_sde() takes 2 positional arguments but 3 were given
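One plausible reading of this error, based on my understanding of PyMC3's EulerMaruyama source (an assumption worth checking against the installed version): its log-probability calls `sde_fn(x[:-1], *sde_pars)`, passing the process's own previous values first and then every element of `sde_pars`. `Yt_sde` therefore receives the previous `Yt`, `Vt` and `error_s`, three arguments for two parameters, which is the same convention `Vt_sde` already follows with its leading `Vt`. The NumPy stand-in below (`euler_maruyama_logp` and both `Yt_sde_*` functions are hypothetical, not PyMC3 API) mimics that call and the Normal transition density, and also shows that a zero diffusion coefficient gives a non-finite log-likelihood.

```python
import numpy as np


def euler_maruyama_logp(x, dt, sde_fn, sde_pars):
    """Hypothetical NumPy stand-in for an Euler-Maruyama path log-likelihood.

    Assumes the call convention sde_fn(previous_values, *sde_pars) -> (drift, diffusion).
    """
    x = np.asarray(x, dtype=float)
    x_prev = x[:-1]
    # The process's own previous values are passed first, then every entry of sde_pars
    f, g = sde_fn(x_prev, *sde_pars)
    mu = x_prev + dt * f
    var = dt * np.square(g)
    # Normal log-density of each step; var == 0 makes it non-finite
    with np.errstate(divide="ignore", invalid="ignore"):
        logp = -0.5 * np.log(2 * np.pi * var) - (x[1:] - mu) ** 2 / (2 * var)
    return float(np.sum(logp))


def Yt_sde_two_args(Vt, error_s):
    # Signature from the post: no slot for the process's own previous value
    return np.sqrt(Vt) * error_s, 0


def Yt_sde_three_args(Yt_prev, Vt, error_s):
    # Same drift and zero diffusion, with the previous Yt accepted first
    return np.sqrt(Vt) * error_s, 0.0


x = np.array([0.0, 0.01, -0.02, 0.005])
Vt = np.full(len(x) - 1, 0.01)  # one variance per transition (illustrative values)

try:
    euler_maruyama_logp(x, 1.0, Yt_sde_two_args, [Vt, 0.5])
except TypeError as err:
    print(err)  # Yt_sde_two_args() takes 2 positional arguments but 3 were given

print(euler_maruyama_logp(x, 1.0, Yt_sde_three_args, [Vt, 0.5]))  # nan (zero diffusion)
```

In this stand-in `Vt` has one entry per transition (`len(x) - 1`); with a full-length `Vt`, as in the model above, the drift and `x[:-1]` would not have matching lengths, which may surface as a second error once the signature is fixed.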

I'm not sure how pm.Potential would help here, since I can't pass an observed variable into pm.Potential.

Is there a reason why pm.Deterministic does not allow you to pass in an observed variable? Are there any workarounds?
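A common workaround, sketched here under assumptions rather than as a verified fix: observed data do not have to enter a PyMC3 model through `observed=`. A NumPy array can appear as a constant inside any model expression, including the one passed to `pm.Potential(name, expr)` or `pm.Deterministic`. Assuming each return is the increment $r_t = \sqrt{V_t}\,\epsilon_t^s$, the shock $\epsilon_t^s$ is a deterministic function of the data and $V_t$, and the returns contribute a $\log \mathcal{N}(r_t \mid 0, V_t)$ term. The NumPy prototype below (both helper names are hypothetical) computes those two quantities and checks the change-of-variables identity $\log \mathcal{N}(r; 0, V) = \log \mathcal{N}(r/\sqrt{V}; 0, 1) - \tfrac{1}{2}\log V$.

```python
import numpy as np


def implied_error_s(returns, Vt):
    """Hypothetical helper: eps_t^s = r_t / sqrt(V_t), assuming r_t = sqrt(V_t) * eps_t^s."""
    return np.asarray(returns, dtype=float) / np.sqrt(np.asarray(Vt, dtype=float))


def returns_loglike(returns, Vt):
    """Hypothetical helper: sum_t log N(r_t | 0, V_t); the returns are plain constants."""
    r = np.asarray(returns, dtype=float)
    V = np.asarray(Vt, dtype=float)
    return float(np.sum(-0.5 * np.log(2 * np.pi * V) - r**2 / (2 * V)))


def std_normal_logpdf(z):
    # Log-density of a standard Normal, elementwise
    return -0.5 * np.log(2 * np.pi) - 0.5 * z**2


r = np.array([0.012, -0.030, 0.004])   # illustrative returns
V = np.array([0.0004, 0.0009, 0.0001])  # illustrative variances

eps = implied_error_s(r, V)
# Change of variables: log N(r; 0, V) = log N(r / sqrt(V); 0, 1) - 0.5 * log(V)
lhs = returns_loglike(r, V)
rhs = float(np.sum(std_normal_logpdf(eps) - 0.5 * np.log(V)))
print(np.isclose(lhs, rhs))  # True
```

Inside the model, the same expressions would be written with pm.math functions (for example pm.math.sqrt) applied to the `Vt` random variable, with `returns` passed in as a NumPy constant.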

This might work. I can work out the log-probability of the posterior for this problem, which I could implement as a class similar to MvGaussianRandomWalk. How would I implement an example like the one you've described for a simple MvGaussianRandomWalk?
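In PyMC3, a custom distribution of this kind is usually a subclass of pm.Continuous whose `logp(self, value)` method returns a Theano expression. Before wrapping it in such a class, the log-density can be prototyped in NumPy. The sketch below does this for a multivariate Gaussian random walk, $x_t = x_{t-1} + \mu + \eta_t$ with $\eta_t \sim \mathcal{N}(0, \Sigma)$, the kind of model MvGaussianRandomWalk describes. The function name is hypothetical, and treating the first row as fixed (no prior term for it) is a simplifying assumption.

```python
import numpy as np


def mv_random_walk_logp(x, mu, cov):
    """Hypothetical prototype: log p(x[1:] | x[0]) for x_t = x_{t-1} + mu + eta_t, eta_t ~ N(0, cov).

    x has shape (T, k); the first row is treated as fixed (no prior term for it).
    """
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    cov = np.asarray(cov, dtype=float)
    k = x.shape[1]
    innov = np.diff(x, axis=0) - mu  # shape (T - 1, k)
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        # A positive-definite covariance must have a positive determinant
        raise ValueError("cov must be positive definite")
    # Mahalanobis term innov_t^T cov^{-1} innov_t for every step
    solved = np.linalg.solve(cov, innov.T)  # shape (k, T - 1)
    maha = np.sum(innov.T * solved, axis=0)
    return float(np.sum(-0.5 * (k * np.log(2 * np.pi) + logdet + maha)))


# With a diagonal covariance the result equals a sum of univariate Normal terms
x = np.array([[0.0, 0.0], [0.5, -1.0], [0.2, 0.3]])
mu = np.array([0.1, 0.0])
sd = np.array([1.0, 2.0])
univariate = np.sum(-0.5 * np.log(2 * np.pi * sd**2) - (np.diff(x, axis=0) - mu) ** 2 / (2 * sd**2))
print(np.isclose(mv_random_walk_logp(x, mu, np.diag(sd**2)), univariate))  # True
```

The same body, with NumPy calls swapped for their Theano counterparts, is what a `logp` method would return; the stochastic-volatility model would replace the constant `mu` and `cov` with the state-dependent drift and diffusion.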

Thanks, everyone, for the help. I really hope I can get this model implemented somehow!