Hmmm interesting, I think there’s some indexing weirdness somewhere, and have an ill-educated suggestion at the end…

My path so far:

(with mild brainache from trying to get my head around xarray, ha)

(1) I fed my model object (and then az.from_pymc3 aka az.data.io_pymc3_3x.from_pymc3 in my v3 usage) with the dims as you suggested:

# snippets involved

...

b0_names = ['gc_omega_b0', 'gc_theta_b0', 'ulr_b0']

...

idata_kwargs = {'dims': {'lkjcc_corr': ['b0_names', 'b0_names2']}}

...

coords.update({'b0_names': b0_names, 'b0_names2': b0_names})

...

k.update(**idata_kwargs)

az.from_pymc3(**k)

… the model samples fine and the posterior idata looks as intended: with coords b0_names and b0_names2 in the right order.

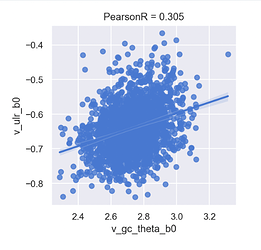

(2) The pairs plot looks the same as I inserted above, so I also wanted to make sure my eyes aren’t playing tricks on me and the position 4 subplot really is positive:

v_ulr_b0 = mdlb6p.idata.posterior['b0'].sel(b0_names='ulr_b0').stack(dims=('chain', 'draw')).values

v_gc_theta_b0 = mdlb6p.idata.posterior['b0'].sel(b0_names='gc_theta_b0').stack(dims=('chain', 'draw')).values

from scipy import stats

rho, _ = stats.pearsonr(v_gc_theta_b0, v_ulr_b0)

f, axs = plt.subplots(1, 1, figsize=(4, 4))

ax = sns.regplot(x=v_gc_theta_b0, y=v_ulr_b0, ax=axs)

_ = ax.set(title=f'PearsonR = {rho:.3f}', xlabel='v_gc_theta_b0', ylabel='v_ulr_b0')

… yep thats the same plot, so the b0 selection is named correctly (and now we have an approximate correlation value to look for +0.3)

In fact here’s all 3 for reference:

(3) I now select from lkjcc_corr as you suggested, remembering that the order doesn’t matter (we could equally do b0_names='gc_theta_b0', b0_names2='ulr_b0')

corr_means = mdlb6p.idata.posterior["lkjcc_corr"].mean(("chain", "draw"))

corr_means.sel(b0_names='ulr_b0', b0_names2='gc_theta_b0')

… we get -0.18, that’s definitely not a positive correlation value

(4) A quick triple check using alternative indexing:

mdlb6p.idata.posterior['lkjcc_corr'].values[:, :, 2, 1].mean()

> -0.18642255020919818

mdlb6p.idata.posterior['lkjcc_corr'].loc[:, :, 'ulr_b0', 'gc_theta_b0'].mean()

> <xarray.DataArray 'lkjcc_corr' ()>

array(-0.18642255)

Coordinates:

b0_names <U11 'ulr_b0'

b0_names2 <U11 'gc_theta_b0'

(5) Okay what about the others? Where’s the positive value around 0.3 ?

There it is, in lower-triangle position 1, aka i=1, j=0 aka

corr_means.sel(b0_names='gc_omega_b0', b0_names2='gc_theta_b0')

> array(0.31553846)

…

So, I think I’ve ruled out indexing issues and coords and dims. The lkjcc_corr matrix appears to be flip-rotated, or the indexing has been reversed along each axis somehow

At this point I start to run out of ideas…

I’ve looked into the code that generates the corr (permalink to v3 branch that I’m using) (note that the v4 code uses the same logic)

chol = pm.expand_packed_triangular(n, packed_chol, lower=True)

# compute covariance matrix

cov = tt.dot(chol, chol.T)

# extract standard deviations and rho

stds = tt.sqrt(tt.diag(cov))

inv_stds = 1 / stds

corr = inv_stds[None, :] * cov * inv_stds[:, None]

if store_in_trace:

stds = pm.Deterministic(f"{name}_stds", stds)

corr = pm.Deterministic(f"{name}_corr", corr)

Is is possible that the tt.dot does a flip-rotate? Or perhaps the indexing for inv_stds is in the wrong order and we want something like corr = inv_stds[:, None] * cov * inv_stds[None, :] ?

I’ll try a couple of things this end…

<EDIT #1 additional exploration>

Right, so I can easily replicate the calculations for lkjcc_corr outside of the model by just reusing that code as:

packed = mdlb6p.idata.posterior['lkjcc'].mean(('chain', 'draw')).values

print(packed) # so you can replicate

> [ 0.98541743 0.43684498 1.25796973 -0.10103472 -0.14227276 0.75882839]

n = 3

out = np.zeros((n, n))

idxs = np.tril_indices(n)

# hacky version of tt.set_subtensor

for p, tup in enumerate(zip(*idxs)):

out[tup] = packed[p]

chol = out.copy()

cov = np.dot(chol, chol.T)

stds = np.sqrt(np.diag(cov))

inv_stds = 1 / stds

corr = inv_stds[None, :] * cov * inv_stds[:, None]

print(corr)

> [[ 1. 0.32804515 -0.12975902]

[ 0.32804515 1. -0.2151765 ]

[-0.12975902 -0.2151765 1. ]]

Which looks broadly the same to me. I’m at a loss…

<EDIT #2 mucking around with flip-rotate on cov and pretty much out of my depth, and trying to relearn matrix math…>

In the above code snippet, if I flip rotate cov, I do get the ordering I would expect:

cov = np.rot90(np.fliplr(cov), k=3)

...

print(corr)

> [[ 1. -0.2151765 -0.12975902]

[-0.2151765 1. 0.32804515]

[-0.12975902 0.32804515 1. ]]

Is this interesting? maybe…

The code uses tt.dot(), but since these are 2D matrices, it would be more appropriate (following the numpy concept) to use tt.matmul which doesn’t exist

If it did exist, I might try to play with the order kwarg, which does exist in numpy, so let’s play with it in numpy:

cov = np.matmul(chol, chol.T, order='K')

cov1 = np.matmul(chol, chol.T, order='C')

cov2 = np.matmul(chol, chol.T, order='F')

print(cov == cov1)

print(cov == cov2)

> [[ True True True]

[ True True True]

[ True True True]]

[[ True True True]

[ True True True]

[ True True True]]

… nope that’s not it, and I’m starting to lose the plot!