Hi, I’m new here. While there are many examples online of different models one can make with pymc, I keep having trouble doing something on my own. I have tried pymc multiple times but I think I eventually always run into an issue with dims. I thought maybe try and ask for help once, instead of endless trial and error ![]()

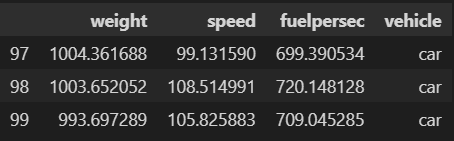

I made a silly toy dataset to do a pretty basic linear regression to answer a simple question. Are trucks more fuel efficient than cars? Fuel per sec is determined by speed and weight of the vehicle. More speed and weight is more fuel use. Cars and trucks drive the same speeds but trucks are heavier so they use more fuel. But what if we account for the effect of weight itself on fuel use? Maybe trucks have more efficient engines so they use less fuel per sec than cars if they weighed the same?

Below is code that generates the data. The numbers are total nonsense of course, it’s just about the principle. It also includes some plotting and a linear regression with statsmodels as a sanity check. Then the pymc model, sampling and plotting of the results.

My main problem is that I get a posterior for each data point individually. But I simply want to see one for cars and trucks separately and ideally also for their difference. Such that I can conclude something like “After accounting for speed and weight, I am 90% sure the difference in fuel use is more than 5, 80% sure the difference is more than 10, 60% sure the difference is more than 20”.

Thank you for your time.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

import pymc as pm

import arviz as az

import statsmodels.api as sm

import statsmodels.formula.api as smf

def generate_data():

np.random.seed(49213)

n = 100

npart = int(n/4) # 25 trucks, 75 cars

speed = np.random.normal(100, 5, n)

weight = np.random.normal(1000, 5, n)

weight[:npart] += 100 # trucks are heavier

fuelpersec = 0.5 * weight + 2 * speed + np.random.normal(0, 1, n)

fuelpersec[:npart] -= 20 # trucks have more efficient engines

df = pd.DataFrame({'weight':weight, 'speed':speed, 'fuelpersec':fuelpersec})

df['vehicle'] = 'car'

df.loc[:npart-1, 'vehicle'] = 'truck'

return df

df = generate_data()

f, axes = plt.subplots(1,2, figsize=[8,3])

sns.scatterplot(df, x='speed', y='fuelpersec', hue='vehicle', ax=axes[0])

sns.scatterplot(df, x='weight', y='fuelpersec', hue='vehicle', ax=axes[1])

# center the data

df['vehicle'] = df['vehicle'].replace({'car':0, 'truck':1})

cols = ['weight', 'speed', 'vehicle']

df[cols] = df[cols] - df[cols].mean(axis=0)

model = smf.ols(formula='fuelpersec ~ vehicle + speed + weight', data=df)

res = model.fit()

print(res.summary())

The coefs are as expected from the data generating. Trucks use 20 less fuel per sec when accounting for speed and weight.

with pm.Model(coords={'idx':df.index}) as model:

# data

speed = pm.MutableData('speed', df['speed'], dims='idx')

weight = pm.MutableData('weight', df['weight'], dims='idx')

vehicle = pm.MutableData('vehicle', df['vehicle'], dims='idx')

fuel = pm.MutableData('fuel', df['fuelpersec'], dims='idx')

# priors

intercept = pm.Normal("intercept", mu=700, sigma=10)

speed_mu = pm.Normal("speed_mu", mu=0, sigma=1)

weight_mu = pm.Normal("weight_mu", mu=0, sigma=1)

vehicle_mu = pm.Normal("vehicle_mu", mu=0, sigma=1)

sigma = pm.HalfNormal("sigma", sigma=1)

mu = pm.Deterministic('mu', intercept + speed_mu*speed + weight_mu*weight + vehicle_mu*vehicle)

# likelihood

Y_obs = pm.Normal("Y_obs", mu=mu, sigma=sigma, observed=fuel)

# sampling

idata = pm.sample_prior_predictive(samples=100, random_seed=0)

idata.extend(pm.sample(draws=1000, tune=1000, chains=2, random_seed=0))

idata.extend(pm.sample_posterior_predictive(idata, random_seed=0))

display(pm.model_to_graphviz(model))

display(az.plot_ppc(idata, group='prior'));

display(az.plot_ppc(idata, num_pp_samples=100));

display(az.summary(idata));