I have data for 36 studies with binomial data for 7 medical treatments. For each trial I have the number of patients, and the number who had an event of interest. Most of the studies have 2 arms, but the first study has 3 arms. I am trying to write code that treats the data the same way as what is in Technical support document 2 on the NICE website, and what is in the book “Network Meta-Analysis for Decision-Making”

I wrote code for two arms, but now I want to have it handle the one case with 3 arms. Latter I will have to add a bias correction for 3 arms trials, but for now I just want to add the single case with 3 arms.

This is not a missing data problem. I would appreciate any help or suggestions on how to handle the 3rd arm.

Hopefully the code below is better formatted than on my first try.

import arviz as az

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import pymc as pm

import pytensor.tensor as pt

import pytensor

%matplotlib inline

mport pandas as pd

import io

data_string="""studyID,na,t1,t2,t3,r1,n1,r2,n2,r3,n3,study

1,3,1,3,4,1472,20251,652,10396,723,10374,GUSTO-1 1993

2,2,1,2,,3,65,3,64,,,ECSG 1985

3,2,1,2,,12,159,7,157,,,TIMI-1 1987

4,2,1,2,,7,85,4,86,,,PAIMS 1989

5,2,1,2,,10,135,5,135,,,White 1989

6,2,1,2,,887,10396,929,10372,,,GISSI-2 1990

7,2,1,2,,5,63,2,59,,,Cherng 1992

8,2,1,2,,1455,13780,1418,13746,,,ISIS-3 1992

9,2,1,2,,9,130,6,123,,,CI 1993

10,2,1,4,,4,107,6,109,,,KAMIT 1991

11,2,1,5,,285,3004,270,3006,,,INJECT 1995

12,2,1,7,,11,149,2,152,,,Zijlstra 1993

13,2,1,7,,1,50,3,50,,,Riberio 1993

14,2,1,7,,8,58,5,54,,,Grinfeld 1996

15,2,1,7,,1,53,1,47,,,Zijlstra 1997

16,2,1,7,,4,45,0,42,,,Akhras 1997

17,2,1,7,,14,99,7,101,,,Widimsky 2000

18,2,1,7,,9,41,3,46,,,DeBoer 2002

19,2,1,7,,42,421,29,429,,,Widimsky 2002

20,2,2,7,,2,44,3,46,,,DeWood 1990

21,2,2,7,,13,200,5,195,,,Grines 1993

22,2,2,7,,2,56,2,47,,,Gibbons 1993

23,2,3,5,,13,155,7,169,,,RAPID-2 1996

24,2,3,5,,356,4921,757,10138,,,GUSTO-3 1997

25,2,3,6,,522,8488,523,8461,,,ASSENT-2 1999

26,2,3,7,,3,55,1,55,,,Ribichini 1996

27,2,3,7,,10,94,3,95,,,Garcia 1997

28,2,3,7,,40,573,32,565,,,GUSTO-2 1997

29,2,3,7,,5,75,5,75,,,Vermeer 1999

30,2,3,7,,5,69,3,71,,,Schomig 2000

31,2,3,7,,2,61,3,62,,,LeMay 2001

32,2,3,7,,19,419,20,421,,,Bonnefoy 2002

33,2,3,7,,59,782,52,790,,,Andersen 2002

34,2,3,7,,5,81,2,81,,,Kastrati 2002

35,2,3,7,,16,226,12,225,,,Aversano 2002

36,2,3,7,,8,66,6,71,,,Grines 2002"""

data=pd.read_csv(io.StringIO(data_string),sep=",")

trials=data[["n1","n2"]]

events=data[["r1","r2"]]

trt=data[["t1","t2"]].to_numpy()-1

N=len(trials)

studies=data["study"]

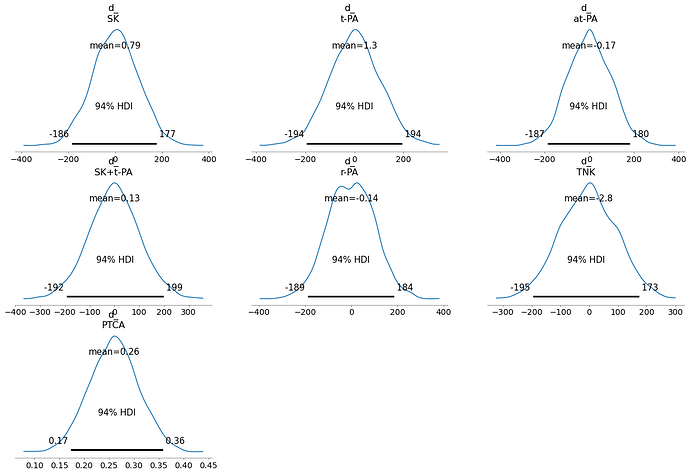

treatments=["SK","t-PA","at-PA","SK+t-PA","r-PA","TNK","PTCA"]

coords = {"studies": studies, "treatments":treatments}

reference=np.array([0,1,1,1,1,1,1])

with pm.Model(coords=coords) as femodel:

r=pm.MutableData('r',events)

n=pm.MutableData('n',trials)

t=pm.MutableData('t',trt)

d_=pm.Normal("d_",mu=0,sigma=100,dims="treatments")

d=pm.Deterministic("d",d_*reference,dims="treatments")

mu=pm.Normal("mu",mu=0,sigma=100,dims="studies",shape=(N,1))

dm=pm.Deterministic("dm",d[t])

linpred=pm.Deterministic("linpred",mu+dm)

p=pm.Deterministic("p",pm.invlogit(linpred))

y=pm.Binomial("y",n=n,p=p,observed=r)

fedata=pm.sample(10000,tune=5000, nuts_sampler="nutpie")

pm.model_to_graphviz(femodel2)

pm.model_to_graphviz(femodel2)