In the prophet framework, you can include additional variables exactly as normal. The benefit of Prophet is that it dispenses with the complicated Cox-Box statistical framework and just treats time series as a curve fitting exercise. The trend is just a piecewise linear function, and the season is just some trig functions. So there’s really nothing stopping you from just adding additional variables to the regression, but you need to be on guard against confounding between the trend and seasonal terms, and these additional terms. For example, if there is a trend or seasonal term in your explanatory variables, parameter estimates will not be identified.

Now, if you want inter-temporal dynamics (ARIMA terms), things get more complex, see this note by Rob Hyndman about how to include exogenous variables in the Cox-Box framework (SARIMAX). SARIMAX is not trivial in PyMC; one of the older hands will need to chime in if you are interested in that.

Here is a code estimating consumption based on the money supply using a Prophet-like setup. The example comes by way of the Statsmodel.api SARIMAX documentation. I’m on PyMC3, but everything in this snippet should be identical in PyMC4, just replace the theano import with aesara.

import pandas as pd

import requests

from io import BytesIO

import pymc3 as pm

import numpy as np

from theano import tensor as tt

from sklearn.preprocessing import StandardScaler

# Load data

friedman2 = requests.get('https://www.stata-press.com/data/r12/friedman2.dta').content

data = pd.read_stata(BytesIO(friedman2))

data.set_index('time', inplace=True)

data.index.freq = "QS-OCT"

# Slice and scale

scaler = StandardScaler()

data_slice = slice('1959', '1981')

use_data = data.loc[data_slice, ['consump', 'm2']].copy()

use_data.loc[:, :] = scaler.fit_transform(use_data.values)

y = use_data['consump'].copy()

X = (use_data.m2

.to_frame()

.assign(constant = 1)

.loc[data_slice, :])

# Set up Prophet features

n_changepoints = 5

N = 3

def make_fourier_features(t, P, N=3):

inner = 2 * np.pi * (1 + np.arange(N)) * t[:, None] / P

return np.c_[np.cos(inner), np.sin(inner)]

#Coords for the PyMC model

time_idxs, times = pd.factorize(y.index)

time_idxs = time_idxs.astype(float)

# Trend matrix

s = np.linspace(0, max(time_idxs), n_changepoints + 2)[1:-1]

A = (time_idxs[:, None] > s) * 1.

break_idxs = np.apply_along_axis(lambda x: np.flatnonzero(x)[0], axis=0, arr=A)

break_dates = [f'{x.year}Q{x.quarter}' for x in times[break_idxs]]

# Make fourier features, P=24 to match a six year business cycle in quarterly data

X_fourier = make_fourier_features(t=time_idxs, P=24, N=3)

X_exog = X['m2'].copy()

coords = dict(

time = times,

fourier_features = [f'{func}_{i+1}' for func in ['cos', 'sin'] for i in range(N)],

break_dates= break_dates,

exog = ['m2']

)

# Model

with pm.Model(coords = coords) as freidman_model:

k = pm.Normal('base_trend', mu = 0.0, sigma = 1.0)

m = pm.Normal('base_intercept', mu = 0.0, sigma = 1.0)

delta = pm.Normal('trend_offsets', mu=0.0, sigma=1.0, dims=['break_dates'])

beta_seasonal = pm.Normal('beta_seasonal', mu=0.0, sigma=1.0, dims=['fourier_features'])

beta_exog = pm.Normal('beta_exog', mu=0.0, sigma=1.0, dims=['exog'])

growth = (k + tt.dot(A, delta))

offset = m + tt.dot(A, (-s * delta))

trend = growth * time_idxs + offset

seasonality = tt.dot(X_fourier, beta_seasonal)

exogenous = X_exog.values * beta_exog

mu = trend + seasonality + exogenous

sigma = pm.HalfNormal('sigma', sigma=1.0)

likelihood = pm.Normal('y_hat',

mu = mu,

sigma = sigma,

observed = y)

# Sample

with freidman_model:

freidman_trace = pm.sample(cores= 6,

target_accept=0.9,

return_inferencedata=True)

Here are the results:

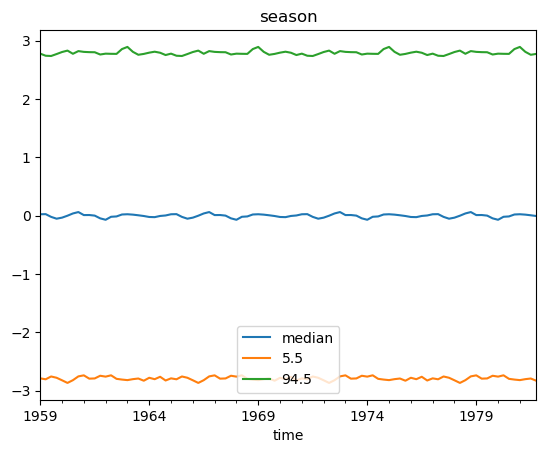

The fit looks good, but this is a very spurious regression and we’re basically just fitting to the (mostly linear) trend. If we were doing real modeling work, you would want to render consumption stationary before doing any of this. More importantly, the M2 money supply is essentially a second trend term. As a result, the estimates for base_trend, base_intercept, trend_offsets, and beta_exog are going to be biased. I re-ran the model taking two differences of the money supply (rendering it stationary via eyeball test, I didn’t bother to do something more formal). Here are plots comparing some posterior estimates:

Naively including the M2 money supply as an explanatory variable before checking it was stationary not only skunked our estimates of the trend and intercept, but it also flipped the sign of the effect of monetary policy on consumption. Obviously we would need to do many more tests to make sure this model is correctly specified. I certainly wouldn’t stake my reputation on it as-is.

So in conclusion, the Prophet framework is nice because you are just doing a standard linear regression using a piecewise linear trend and some fourier features as explanatory variables. This makes it easy to expand and include additional explanatory variables. Nevertheless, you can’t throw out all the stuff you learned in Time Series 101 just because you have a fancy model.