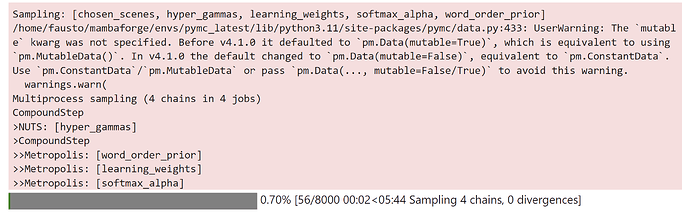

I updated pymc to the latest version and ran the model in this gist (should run as is). Unexpectedly, the Metropolist step is chosen for word_order_prior, learning_weights, and softmax_alpha:

This is despite the fact that they all have a continuous distribution & a previous version of pymc v5 automatically used hmc. Did something change that is causing this (unexpected to me) behaviour? Thanks for the help.

If it helps, here’s the output of:

for name, module in sorted(sys.modules.items()):

if hasattr(module, '__version__'):

print(f"{name}: {module.__version__}")

IPython: 8.12.0

IPython.core.release: 8.12.0

PIL: 9.5.0

PIL.Image: 9.5.0

PIL._deprecate: 9.5.0

PIL._version: 9.5.0

_csv: 1.0

_ctypes: 1.1.0

_curses: b'2.2'

_decimal: 1.70

_pydev_bundle.fsnotify: 0.1.5

_pydevd_frame_eval.vendored.bytecode: 0.13.0.dev

argparse: 1.1

arviz: 0.15.1

arviz.data.base: 0.15.1

backcall: 0.2.0

betterproto: 2.0.0b5

betterproto._version: 2.0.0b5

cachetools: 5.3.0

cffi: 1.15.1

clickhouse_driver: 0.2.6

cloudpickle: 2.2.1

comm: 0.1.3

cons: 0.4.5

csv: 1.0

ctypes: 1.1.0

cycler: 0.10.0

dateutil: 2.8.2

debugpy: 1.6.7

debugpy.public_api: 1.6.7

decimal: 1.70

decorator: 5.1.1

defusedxml: 0.7.1

distutils: 3.11.3

etuples: 0.3.8

executing: 1.2.0

executing.version: 1.2.0

fastprogress: 1.0.3

filelock: 3.12.0

filelock.version: 3.12.0

grpclib: 0.4.4

grpclib.metadata: 0.4.4

h2: 4.1.0

hagelkorn: 1.2.3

hpack: 4.0.0

http.server: 0.6

hyperframe: 6.0.1

ipaddress: 1.0

ipykernel: 6.22.0

ipykernel._version: 6.22.0

jedi: 0.18.2

json: 2.0.9

jupyter_client: 8.2.0

jupyter_client._version: 8.2.0

jupyter_core: 5.3.0

jupyter_core.version: 5.3.0

kiwisolver: 1.4.4

kiwisolver._cext: 1.4.4

llvmlite: 0.40.0

logging: 0.5.1.2

matplotlib: 3.7.1

matplotlib._version: 3.7.1

mcbackend: 0.5.0

mkl: 2.4.0

multidict: 6.0.4

multipledispatch: 0.6.0

numba: 0.57.0

numba.cloudpickle: 2.2.0

numba.misc.appdirs: 1.4.1

numpy: 1.24.3

numpy.core: 1.24.3

numpy.core._multiarray_umath: 3.1

numpy.lib: 1.24.3

numpy.linalg._umath_linalg: 0.1.5

numpy.version: 1.24.3

packaging: 23.1

pandas: 2.0.1

parso: 0.8.3

pexpect: 4.8.0

pickleshare: 0.7.5

pkg_resources._vendor.more_itertools: 9.0.0

pkg_resources._vendor.packaging: 23.0

pkg_resources._vendor.platformdirs: 2.6.2

pkg_resources._vendor.platformdirs.version: 2.6.2

pkg_resources.extern.more_itertools: 9.0.0

pkg_resources.extern.packaging: 23.0

pkg_resources.extern.platformdirs: 2.6.2

platform: 1.0.8

platformdirs: 3.5.0

platformdirs.version: 3.5.0

prompt_toolkit: 3.0.38

psutil: 5.9.5

ptyprocess: 0.7.0

pure_eval: 0.2.2

pure_eval.version: 0.2.2

pydevd: 2.9.5

pygments: 2.15.1

pymc: 5.9.0

pyparsing: 3.0.9

pytensor: 2.17.3

pytz: 2023.3

re: 2.2.1

scipy: 1.10.1

scipy._lib._uarray: 0.8.8.dev0+aa94c5a4.scipy

scipy._lib.decorator: 4.0.5

scipy.integrate._dop: 1.23.5

scipy.integrate._lsoda: 1.23.5

scipy.integrate._vode: 1.23.5

scipy.interpolate.dfitpack: 1.23.5

scipy.linalg._fblas: 1.23.5

scipy.linalg._flapack: 1.23.5

scipy.linalg._flinalg: 1.23.5

scipy.linalg._interpolative: 1.23.5

scipy.optimize.__nnls: 1.23.5

scipy.optimize._cobyla: 1.23.5

scipy.optimize._lbfgsb: 1.23.5

scipy.optimize._minpack2: 1.23.5

scipy.optimize._slsqp: 1.23.5

scipy.sparse.linalg._eigen.arpack._arpack: 1.23.5

scipy.sparse.linalg._isolve._iterative: 1.23.5

scipy.special._specfun: 1.23.5

scipy.stats._mvn: 1.23.5

scipy.stats._statlib: 1.23.5

setuptools: 67.7.2

setuptools._distutils: 3.11.3

setuptools._vendor.more_itertools: 8.8.0

setuptools._vendor.ordered_set: 3.1

setuptools._vendor.packaging: 23.0

setuptools.extern.more_itertools: 8.8.0

setuptools.extern.ordered_set: 3.1

setuptools.extern.packaging: 23.0

setuptools.version: 67.7.2

six: 1.16.0

socketserver: 0.4

stack_data: 0.6.2

stack_data.version: 0.6.2

traitlets: 5.9.0

traitlets._version: 5.9.0

unification: 0.4.5

urllib.request: 3.11

wcwidth: 0.2.6

xarray: 2023.4.2

xarray_einstats: 0.5.1

xmlrpc.client: 3.11

yaml: 6.0

zlib: 1.0

zmq: 25.0.2

zmq.sugar: 25.0.2

zmq.sugar.version: 25.0.2