I’m trying to understand how to use correlated priors for the varying intercepts and slopes in mixed models because I would like to incorporate that into Bambi. I’m using the famous sleepstudy dataset. This post aims mainly to check whether what I’m doing is correct and/or check if there are better alternatives.

First of all, I fit the model using independent priors.

# Libraries

import arviz as az

import pandas as pd

import pymc3 as pm

import theano.tensor as tt

from formulae import design_matrices

# Read data

data = pd.read_csv(

"https://raw.githubusercontent.com/vincentarelbundock/Rdatasets/master/csv/lme4/sleepstudy.csv",

index_col = 0

)

# Get design matrices for common and group-specific parts

dm = design_matrices("Reaction ~ Days + (Days|Subject)", data)

X = dm.common.design_matrix

Z_0 = dm.group["1|Subject"]

Z_slope = dm.group["Days|Subject"]

# Just the mean of the prior for the intercept

β_0_mean = data["Reaction"].mean()

# Model 1:Independent priors for varying intercept and slopes

with pm.Model() as model:

# Common part

β_0 = pm.HalfNormal("β_0", sigma=100)

β_days = pm.Normal("β_days", mu=0, sigma=100)

β = tt.stack(β_0, β_days)

# Group-specific part, with non-centered parametrization

# Intercept

b_0_σ = pm.HalfNormal("b_0_σ", sigma=100)

b_0_offset = pm.Normal("b_0_offset", mu=0, sigma=1, shape=Z_0.shape[1])

b_0 = pm.Deterministic("b_0", b_0_offset * b_0_σ)

# Slope

b_days_σ = pm.HalfNormal("b_days_σ", sigma=100)

b_days_offset = pm.Normal("b_days_offset", mu=0, sigma=1, shape=Z_slope.shape[1])

b_days = pm.Deterministic("b_days", b_days_offset * b_days_σ)

# Conditional mean

μ = pm.math.dot(X, β) + pm.math.dot(Z_0, b_0) + pm.math.dot(Z_slope, b_days)

σ = pm.HalfNormal("σ", sigma=100)

y = pm.Normal('y', mu=μ, sigma=σ, observed=data.Reaction)

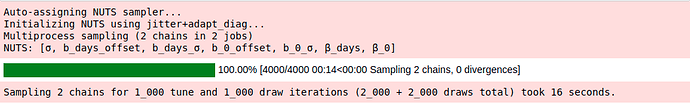

idata = pm.sample(return_inferencedata=True)

And everything works great.

But here’s the thing when I go to model 2

coords = {

"Subject": data["Subject"].unique(),

"Effect": ["Intercept", "Slope"]

}

with pm.Model(coords=coords) as model_2:

# Common part

β_0 = pm.HalfNormal("β_0", sigma=100)

β_days = pm.Normal("β_days", mu=0, sigma=100)

β = tt.stack(β_0, β_days)

# Group-specific part

b_σ = pm.HalfNormal.dist(sigma=100, dims="Effect")

chol, _, _ = pm.LKJCholeskyCov('chol', n=2, eta=1, sd_dist=b_σ, compute_corr=True)

b_raw = pm.Normal('b_raw', mu=0, sigma=1, dims=("Subject", "Effect"))

b = pm.Deterministic('b', tt.dot(chol, b_raw.T).T, dims=("Subject", "Effect"))

mu = pm.math.dot(X, β) + pm.math.dot(Z_0, b[:, 0]) + pm.math.dot(Z_slope, b[:, 1])

σ = pm.HalfNormal("σ", sigma=100)

y = pm.Normal('y', mu=mu, sigma=σ, observed=data.Reaction)

idata = pm.sample(return_inferencedata=True)

It works, but slower, and sometimes I get divergences… I was expecting the opposite.

- Anyone knows if my approach using the LKJCholeskyCov could be improved?

- Is it possible to rename names derived from

chol, likechol_stds, and only keep non-redundant and non-constant dimensions? That causes problems when callingaz.plot_trace()withchol_coorrvariable name, because of the correlations that are equal to 1. For example, I would like to havechol_stds[0]→b_sigma[Interecept]andchol_stds[1]→b_sigma[Slope]. - I also tried passing

dimstopm.LKJCholeskyCovto obtain labeled coordinates, but there’s no effect. Is it possible to have labeled dimensions when usingpm.LKJCholeskyCov?

I don’t include the trace plot where I use az.plot_trace(idata, var_names="chol_corr"). But I get a lot of warning messages because of the 1 correlations.