Hi,

I am currently working with a code which aims to infer knowledge about gamma-rays. Therefore I need many bins so the signal shows. The model I have for this is:

import pymc3 as mc from theano import tensor as T import theano; theano.gof.cc.get_module_cache().clear() trace_out = [] resmat = resp # response matix of the detector truthdim = len(resmat) prior_upper = 5550*np.ones(len(raw_py)) # 5550 is the highest event in the raw-data prior_lower = np.zeros(len(raw_py)) with mc.Model(): priors = { } def wrapper(priorname='',low=[],up=[],other_args={},optimized=False): if (priorname in priors): priormethod = priors[priorname] elif (hasattr(mc,priorname)): priormethod = getattr(mc,priorname) else: print( 'WARNING: prior name not found! Falling back to DiscreteUniform...' ) priormethod = mc.DiscreteUniform truthprior = [] for bin,(l,u) in enumerate(zip(low,up)): name = 'truth%d'%bin default_args = dict(name=name,mu=l,sigma=u) args = dict(list(default_args.items())+list(other_args.items())) #print(args) prior = priormethod(**args) truthprior.append(prior) return mc.math.stack(truthprior) truth = wrapper('DiscreteUniform', prior_lower, prior_upper) def unfold(): tresmat = np.array(resmat) reco = theano.dot(truth, tresmat) out = reco return out unfolded = mc.Poisson('unfolded', mu=unfold(), observed=np.array(raw_py)) trace = mc.sample(2000, tune=1000, cores=1, chains=2, nuts_kwargs=None, discard_tuned_samples=True, progressbar=True)

The problems I am having is:

1: Theano constantly give me the cache warning:

INFO (theano.gof.compilelock): Refreshing lock /home/user/.theano/compiledir_Linux-4.15–generic-x86_64-with-debian-buster-sid-x86_64-3.7.4-64/lock_dir/lock

2: I need a posterior for each bin, and I need to have up to 4000 bins in some cases, to be able to infer the information needed. I think this is making the sampling slow. Yesterday I had 1.3 samplings per second and the whole process took 5 hours for 20 000 samples, and that is only for 512 bins. Am I doing anything wrong?

3: If I have over 800 bins and a lot of samples it crashes after a long time. I will add a picture of the error:

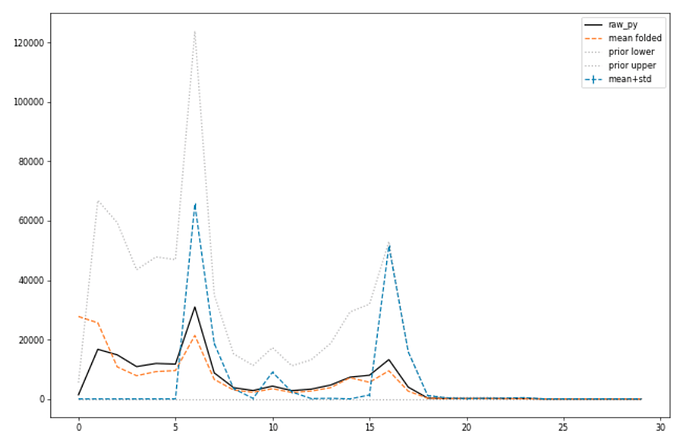

More info: The data is counts of gamma-rays. So it can be many hundred thousand events in one spectrum. In many cases the data is skewed, with many zeros and just above zero and some events reaching very high.

All help is much appreciated!