Referring to the link : pymc.CustomDist — PyMC dev documentation

Provide a random function that return numerical draws. This allows one to use a CustomDist in prior and posterior predictive sampling.

from typing import Optional, Tuple

import numpy as np

import pymc as pm

from pytensor.tensor import TensorVariable

def logp(value: TensorVariable, mu: TensorVariable) -> TensorVariable:

return -(value - mu)**2

def random(

mu: np.ndarray | float,

rng: Optional[np.random.Generator] = None,

size : Optional[Tuple[int]]=None,

) -> np.ndarray | float :

return rng.normal(loc=mu, scale=1, size=size)

with pm.Model():

mu = pm.Normal('mu', 0 , 1)

pm.CustomDist(

'custom_dist',

mu,

logp=logp,

random=random,

observed=np.random.randn(100, 3),

size=(100, 3),

)

prior = pm.sample_prior_predictive(10)

Could someone please explain how the above works? I am not able to wrap my head around how -

- sample prior predictive works

- how are we using customDist to sample_prior_predictive

When you define a PyMC model you define a generative model (think of it as chained np.random.foo functions), that can be evaluated via forward sampling to obtain random draws. You take draws from the top variables and then pass it to the children and so on, until there are no variables left to evaluate.

This notebook goes a bit over the internals: PyMC and PyTensor — PyMC 5.10.0 documentation and this outdated notebook shows a simple re-implementation of sample_prior_predictive from scratch: Google Colab

If you provide either a random or dist functions to CustomDist those will be used for forward sampling in prior_predictive. As I mentioned a PyMC model is just a (symbolic) chain of np.random.foo calls, and your random function is one more in there.

Let me know if this is too hand-wavy and you need more precise pointers.

Hi @ricardoV94

Thanks for responding. I have little to no experience in PYMC and I am trying to get into deep waters here. I am trying to implement a cox proportional hazards model for competing risks.

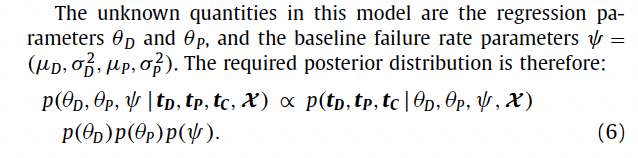

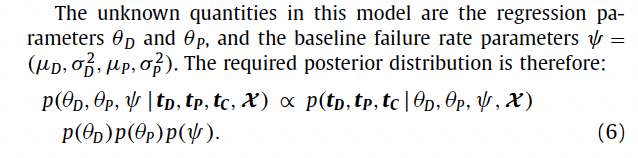

I have specified the model and it is sampling. I am estimating mean and standard deviation of the baseline hazard rate and regression parameters in proportional hazards. My problem looks like this

I have a custom likelihood that I have implemented.

While sampling I can see divergences. Also the parameters mean and standard deviation have almost constant values in the generated samples in respective chains, so the chains look stuck.

I have a dataset which has approx 1.2M records spanning from 2013-19. I have random sampled it down to 60k for testing the model.

For diagnostics, I dont have a clue where to start. @ricardoV94 if you could suggest something?

In general, a good place to start is to generate synthetic data that faithfully reflects the model you are using for inference. Generating a (relatively) small amount of data allows you to iterate quickly. Checking posteriors against (known) true parameter values allows you to figure out if the model is what you want it to be. Jumping straight to big/real data sets can be very tricky, even for those who have been doing this sort of thing for a while.

If you haven’t already, I would suggest taking a look at this notebook which, though not updated to PyMC v5, may prove useful.

Hi @cluhmann

Thanks for responding. That is exactly what I am trying to figure by learning about how sample_prior_predictive works. The way my problem is designed, I dont have the pdf of the model that I am using. Therefore I am not able to generate synthetic data from the process. My understanding is, you fix the parameters of the model, generate samples from the process, this becomes your ground truth. And then you run your MCMC sampler with observed=groundTruth, to see if the parameter samples are close to what you chose in the to generate the ground truth.

Basically my model is a cox proportional hazards model :

The baseline time to failure ~ LogNormal(mu,sigma) - if this was the only part in the model, I could have generated synthetic data(observed values) from this. But its the second multiplicative term due to which I am not able to generate synthetic data.

Trying to see how sample_prior_predictive can help in this situation

You can definitely use your model to generate synthetic data. However, I often find that generating it via an independent “model” (e.g., something hand-coded in numpy, etc.) is useful because it is often the case that there’s uncertainty about whether the model is “correct” (i.e., what you want). If your model isn’t yet there, then using it to generate data means you aren’t quite sure what your (synthetic) data is either.

That being said, you can check out this post to see some suggestions about how to use PyMC models to generate data.

@cluhmann could you please elaborate more on this?

If your model isn’t yet there, then using it to generate data means you aren’t quite sure what your (synthetic) data is either.

Meaning, if I don’t know whether my model is working the way I want it to, and I use it to generate synthetic data, then I don’t really understand that data any more than I understand my model. If I then fit my model to that data and it “works”, it’s unclear what exactly I have demonstrated/accomplished. In contrast, if I can code up exactly what I am thinking in numpy, etc. then I can be more confident that my data accurately reflected the generative process I wish to model. Building that same logic into a PyMC model (or trying to) can then evaluated by fitting the (maybe correct, maybe incorrect) model to the data (from the known generative process). If it fits well and recovers the parameter true/known values, that gives you some indication that your PyMC model reflects what you intended it to.

2 Likes

@cluhmann Totally makes sense now. Thanks a lot for that !

I’ll find a way to reproduce the process in some numpy way and generate data from there.

1 Like

Take my suggestion as just that: a suggestion. If you aren’t going to be more confident in a numpy-generated data set than you would be in a PyMC-generated data set, none of what I said above really applies. But given that you said you had less experience with PyMC, you might want to start somewhere you feel a bit more confidence and then use that data set (that you are more confident in) to test how your PyMC model is doing.

2 Likes