Hi!

I am trying to implement Bayesian Learner Model of Tim Behrens. It’s a model like this:

Behrens infer some parameters in our brain changed over time.

y is the choice of subjects and r is the proportion if y == 1.

And the update rule of parameters follows the Bayesian rule like this:

and

One could find out the definition of this model on pages 5-6 of this appendix.

I meet SamplingError over time. And the most confusing problem is how to share the parameters of multi RVs between steps.

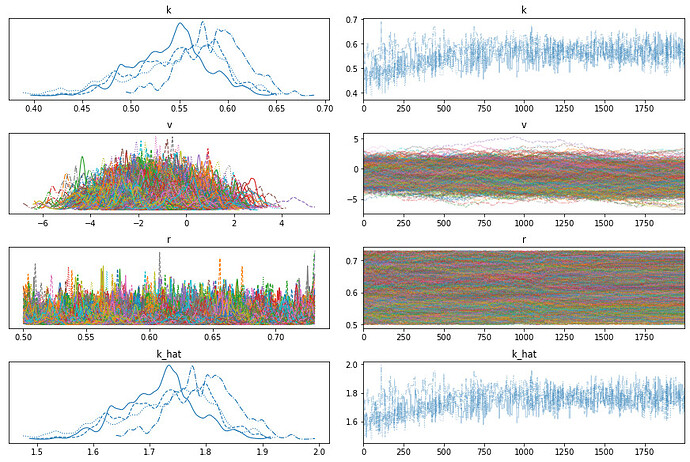

Here is my newest code (So many thanks to @ricardoV94 ):

import pymc3 as pm

import theano.tensor as tt

import pandas as pd

import numpy as np

from scipy import stats

# Create the test data

p = np.repeat([.75, .75, .75, .25, .75, .25], 100)

env= stats.binom.rvs(1, p)

# Modeling

with pm.Model() as bayesian_lerner_model:

k = pm.Normal("k", mu = 1, sigma = 1, testval = 0.6)

k_ = pm.Deterministic('k_cap', pm.math.exp(k))

v = pm.GaussianRandomWalk("v", mu = 0.7, sigma = k_, testval = 0.05, shape = len(env))

v_ = pm.Deterministic('v_cap', pm.math.exp(v))

r = []

for ti in range(len(env)):

if ti == 0:

# Testvals are used to prevent -inf initial probability

r.append(pm.Beta(f'r{ti}', 1, 1))

else:

w = r[ti-1]

k = 1 / v_[ti-1]

r.append(pm.Beta(f'r{ti}', alpha=w*(k-2) + 1, beta=(1-w)*(k-2) + 1, testval = 0.5))

r = pm.Deterministic('r', pm.math.stack(r))

y = pm.Bernoulli("y", p = r, observed = env)

trace = pm.sample(return_inferencedata=True)

As the comparison, Michael Waskom implements the model by Numpy. Here’s his code:

"""Python implementation of a Bayesian probabilistic learner.

This model was originally described in Behrens et al. Nat Neuro 2007.

The code here was adapted from the original C++ code provided by

Tim Behrens.

"""

class ProbabilityLearner(object):

def __init__(self, p_step=.01, I_step=.1):

# Set up the parameter grids

p_grid = make_grid(.01, .99, p_step)

self.p_grid = p_grid

I_grid = make_grid(log(2), log(10000), I_step)

self.I_grid = I_grid

self._p_size = p_grid.size

self._I_size = I_grid.size

# Set up the transitional distribution on p

joint_grid = np.meshgrid(p_grid, p_grid, I_grid, indexing="ij")

p_trans = np.vectorize(p_trans_func)(*joint_grid)

self._p_trans = p_trans / p_trans.sum(axis=0)

# Initialize the learner and history

self.reset()

@property

def p_hats(self):

return np.atleast_1d(self._p_hats)

@property

def I_hats(self):

return np.atleast_1d(self._I_hats)

@property

def data(self):

return np.atleast_1d(self._data)

def fit(self, data):

"""Fit the model to a sequence of Bernoulli observations."""

if np.isscalar(data):

data = [data]

for y in data:

self._update(y)

pI = self.pI

self.p_dists.append(pI.sum(axis=1))

self.I_dists.append(pI.sum(axis=0))

self._p_hats.append(np.sum(self.p_dists[-1] * self.p_grid))

self._I_hats.append(np.sum(self.I_dists[-1] * self.I_grid))

self._data.append(y)

def _update(self, y):

"""Perform the Bayesian update for a trial based on y."""

# Information leak (increase in the variance of the joint

# distribution to reflect uncertainty of a new trial)

# -------------------------------------------------------

pI = self.pI

# Multiply P(p_p+1 | p_i, I) by P(p_i, I) and

# integrate out p_i, which gives P(p_i+1, I)

# pI = (self._p_trans * pI).sum(axis=1)

# Equivalent but twice as fast:

pI = np.einsum("ijk,jk->ik", self._p_trans, pI)

# Update P(p_i+1, I) based on the newly observed data

# ----------------------------------------------------------

likelihood = self.p_grid if y else 1 - self.p_grid

pI *= likelihood[:, np.newaxis]

# Normalize the new distribution

# ------------------------------

self.pI = pI / pI.sum()

def make_grid(start, stop, step):

"""Define an even grid over a parameter space."""

count = (stop - start) / step + 1

return np.linspace(start, stop, count)

def I_trans_func(I_p1, I, k):

"""I_p1 is normal with mean I and std dev k."""

var = exp(k * 2)

pdf = exp(-.5 * power(I - I_p1, 2) / var)

pdf *= power(2 * pi * var, -0.5)

return pdf

def p_trans_func(p_p1, p, I_p1):

"""p_p1 is beta with mean p and precision I_p1."""

a = 1 + exp(I_p1) * p

b = 1 + exp(I_p1) * (1 - p)

if 0 < p_p1 < 1:

logkerna = (a - 1) * log(p_p1)

logkernb = (b - 1) * log(1 - p_p1)

betaln_ab = gammaln(a) + gammaln(b) - gammaln(a + b)

return exp(logkerna + logkernb - betaln_ab)

else:

return 0

As a newbie, I have no idea which part of my code is wrong. So I am looking forward to your help.

Thanks!