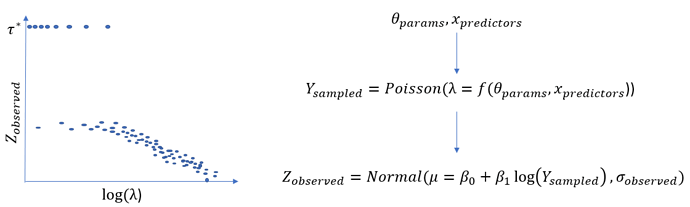

I have a physical model in which a discrete sample, $Y_{sampled}$, is drawn from a Poisson process, and that sample is then measured by a separate process as $Z_{observed}$, which depends on the log of the Poisson random variable $Y_{sampled}$ plus some Gaussian noise. In the measurement process, draws with $Y_{sampled} = 0$ are recorded as $Z_{observed} = \tau^*$, while draws with $Y_{sampled} > 0$ give a continuous $Z_{observed}$, sampled from a normal distribution centered on a linear function of $\log(Y_{sampled})$.
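A minimal simulation of this generative process may make it concrete. It assumes the linear function has the form $a + b\log(Y_{sampled})$ with Gaussian noise of standard deviation $\sigma$; the names `A`, `B`, `SIGMA`, `TAU_STAR` and their values are hypothetical placeholders, not part of the original model. It uses only the Python standard library.

```python
import math
import random

# Hypothetical parameter values, for illustration only.
LAM = 2.0        # Poisson rate lambda
A, B = 1.0, 0.5  # intercept and slope of the linear function of log(Y)
SIGMA = 0.3      # standard deviation of the Gaussian measurement noise
TAU_STAR = -1.0  # sentinel value recorded when Y == 0


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) value with Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate(n, lam=LAM, a=A, b=B, sigma=SIGMA, tau_star=TAU_STAR, seed=0):
    """Return (ys, zs): latent Poisson counts and the corresponding measurements."""
    rng = random.Random(seed)
    ys, zs = [], []
    for _ in range(n):
        y = sample_poisson(lam, rng)
        if y == 0:
            z = tau_star  # zero counts are recorded as the sentinel tau*
        else:
            # nonzero counts: normal noise around a linear function of log(y)
            z = rng.gauss(a + b * math.log(y), sigma)
        ys.append(y)
        zs.append(z)
    return ys, zs
```

In practice only `zs` is observed; `ys` is returned here just to check the simulation.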

I'd like to infer $\lambda$, or rather the parameters $\theta$ that determine $\lambda$, but I'm having trouble writing down the correct likelihood for $Z_{observed}$. I think I can split $Z_{observed}$ into two parts. The first, $Z_{obs,\tau^*} = Z_{observed}[Z_{observed} == \tau^*]$, has a likelihood given directly by the Poisson distribution (the probability that $Y_{sampled} = 0$). But how should I handle the rest of the data, $Z_{obs,else}$? It seems like I wouldn't want to use a zero-truncated Poisson here, because that artificially inflates the probability of nonzero $Y_{sampled}$ when zero was actually possible, just not observed in the $Z_{obs,else}$ array. It seems like I need some way to take the $Y_{sampled}$ random variable and truncate it so that I don't pass a zero to $\log()$ in the normal likelihood.
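One possible way to write the split likelihood, sketched under the same hypothetical parameterization ($a$, $b$, $\sigma$, `tau_star`): an observation equal to $\tau^*$ contributes $\log P(Y_{sampled} = 0) = -\lambda$, and any other observation $z$ contributes the joint density $\sum_{y \ge 1} \text{Poisson}(y \mid \lambda)\,\mathcal{N}(z \mid a + b\log y, \sigma)$, marginalizing over the unobserved count. The sum starts at $y = 1$, so $\log()$ never sees a zero, and it is not divided by $P(Y_{sampled} \ge 1)$, so it is not a zero-truncated Poisson. The cutoff `y_max` is an arbitrary assumption and must be large enough that the Poisson tail beyond it is negligible for the $\lambda$ values of interest.

```python
import math


def log_poisson_pmf(y, lam):
    # log of lam**y * exp(-lam) / y!
    return y * math.log(lam) - lam - math.lgamma(y + 1)


def log_normal_pdf(z, mu, sigma):
    return -0.5 * ((z - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


def logsumexp(xs):
    # Numerically stable log(sum(exp(x) for x in xs)).
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def log_lik_one(z, lam, a, b, sigma, tau_star, y_max=200):
    """Log-likelihood of one observation (a, b, sigma, tau_star, y_max are hypothetical)."""
    if z == tau_star:
        # Only Y == 0 is recorded as tau*, so the likelihood is P(Y = 0) = exp(-lam).
        return -lam
    # Any other z implies Y >= 1: sum the joint density over y = 1..y_max.
    # No division by P(Y >= 1), i.e. this is NOT a zero-truncated Poisson.
    terms = [
        log_poisson_pmf(y, lam) + log_normal_pdf(z, a + b * math.log(y), sigma)
        for y in range(1, y_max + 1)
    ]
    return logsumexp(terms)


def log_lik(zs, lam, a, b, sigma, tau_star, y_max=200):
    # Observations are independent, so the total log-likelihood is a sum.
    return sum(log_lik_one(z, lam, a, b, sigma, tau_star, y_max) for z in zs)
```

Because the $\tau^*$ mass $e^{-\lambda}$ and the continuous part together integrate to 1, no renormalization is needed.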

Thoughts?

Thanks in advance.