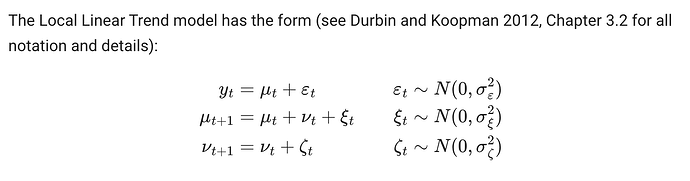

I am trying to code a smooth local linear trend model (a local linear trend model with level innovations disabled):

y[t] = level[t] + obs_err

level[t+1] = level[t] + trend[t]

trend[t+1] = trend[t] + trend_err (not as I wrote before trend[t+1] = trend[t+1] + trend_err)

with initial values level[0] and trend[0]. Thus, we have: y[0] = level[0]+obs_err

Using scan and a customDist, would the following implementation be correct? Notice that y is updated at the beginning in ll_step contrary to the many examples I saw in this blog where y is usually updated at the end.

lags = 1

timeseries_length = 100

def ll_step(*args):

level_tm1, trend_tm1, trend_sigma, obs_sigma = args

y = pm.Normal.dist(level_tm1, obs_sigma)

trend = trend_tm1 + pm.Normal.dist(0, trend_sigma)

level = level_tm1 + trend_tm1

# This is what I saw in some other similar examples

# y = pm.Normal.dist(level, obs_sigma)

return (level, trend, y), collect_default_updates(inputs=args, outputs=[level, trend, y])

def ll_dist(level0, trend0, trend_sigma, obs_sigma, size):

(level, trend, y), _ = pytensor.scan(

fn=ll_step,

outputs_info = [level0, trend0, None],

non_sequences=[trend_sigma, obs_sigma],

n_steps=timeseries_length - lags,

strict=True,

)

return y

coords = {

"order": ["level", "trend"],

"lags": range(-lags, 0),

"steps": range(timeseries_length - lags),

"timeseries_length": range(timeseries_length),

}

with pm.Model(coords=coords, check_bounds=False) as model:

# Priors

trend_sigma = pm.Gamma("trend_sigma", alpha=2, beta=50)

# Hyperpriors for the means and stds

level_mu0 = pm.Normal("level_mu0", mu=0, sigma=1)

level_sigma0 = pm.Gamma("level_sigma0", alpha=5, beta=5)

trend_mu0 = pm.Normal("trend_mu0", mu=0, sigma=1)

trend_sigma0 = pm.Gamma("trend_sigma0", alpha=5, beta=5)

# Priors with hyperpriors

level0 = pm.Normal("level0", mu=level_mu0, sigma=level_sigma0)

trend0 = pm.Normal("trend0", mu=trend_mu0, sigma=trend_sigma0)

obs_sigma = pm.HalfNormal("obs_sigma", sigma=0.05)

ll_steps = pm.CustomDist(

"ll_steps",

level0,

trend0,

trend_sigma,

obs_sigma,

dist=ll_dist,

dims=("steps",),

)

pm.model_to_graphviz(model)